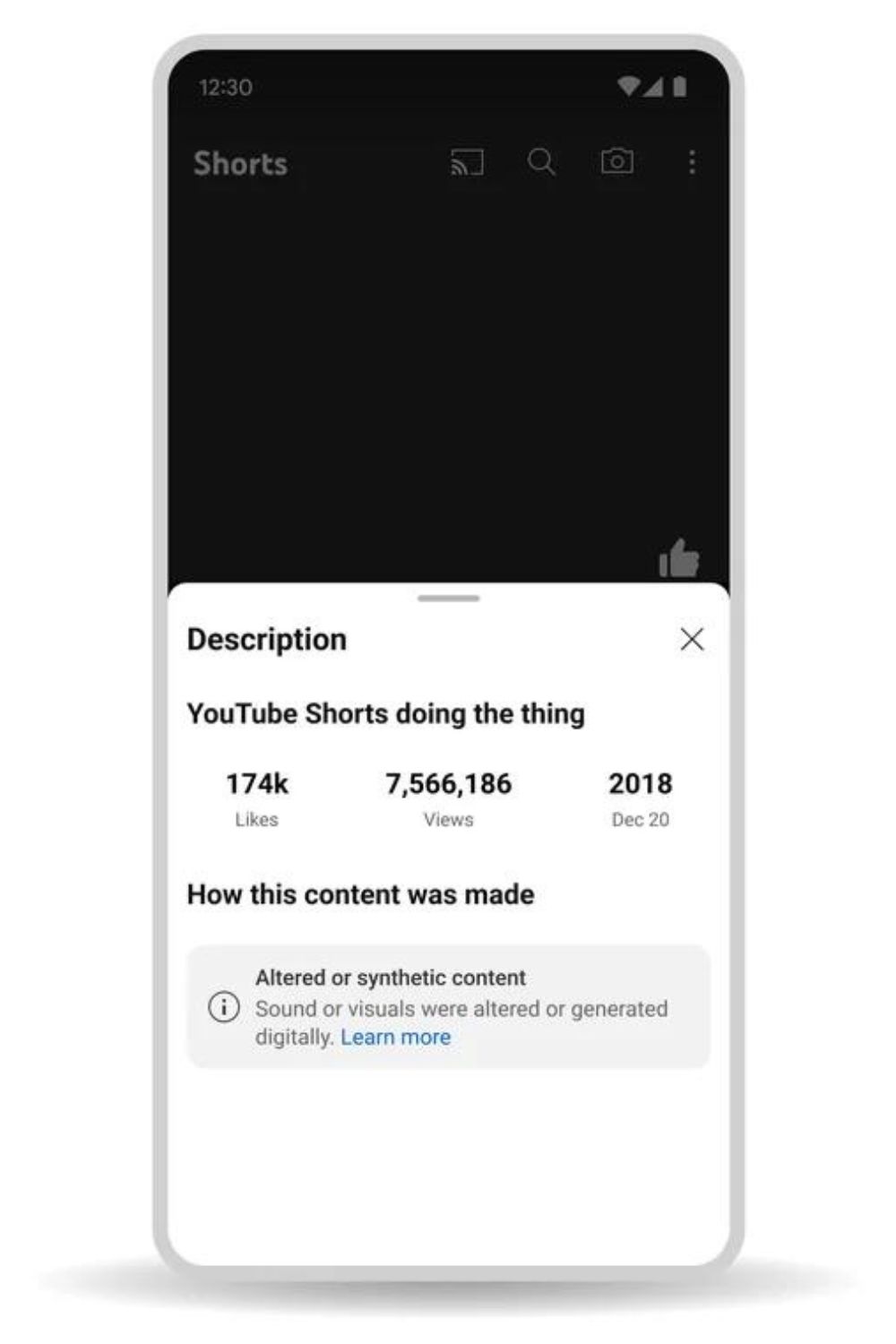

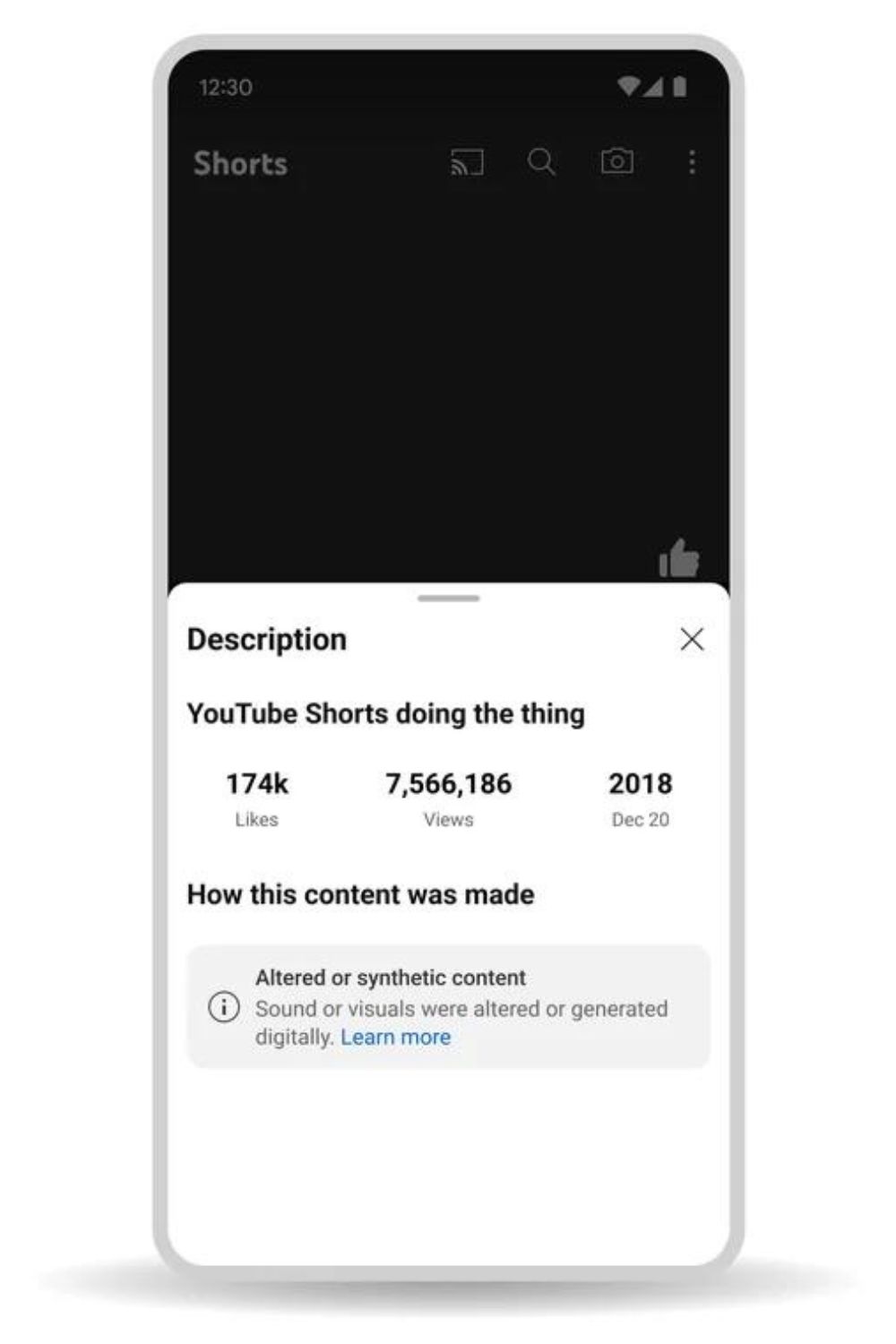

In the coming months, YouTube will require creators to label AI-generated content on its platform, and the platform will alert users when they watch AI-generated content. Also, YouTube will allow people to request the removal of manipulated video “that simulates an identifiable individual, including their voice or face.”

YouTube said in a blog post that not all content will be removed and that it will weigh a variety of factors when reviewing these requests. These include whether the content is parody or satire, whether the person making the request can be uniquely identified, and whether the video features a public official or well-known individual, “in which case there may be a higher bar.”

YouTube will also introduce a similar removal request process for its music partners when AI-generated music mimics an artist’s voice. Labels or distributors representing artists in YouTube’s “early AI music experiments” will be able to submit these requests first, with access later extended to additional artist representatives.

Beyond setting rules for AI-generated content, YouTube is also expanding its own use of AI in content moderation.

“One clear impact of the program was the identification of novel forms of abuse,” the company said. When new threats emerge, YouTube explained, its systems have too little context to understand and identify them at scale. Generative AI lets the company rapidly expand the set of information its AI classifiers are trained on, so it can detect and catch this content more quickly. The systems’ increased speed and precision also limit the amount of harmful content that human reviewers are exposed to.

YouTube stated in late 2020 that using greater automation in its moderation operations presented difficulties and that AI produced more errors than human reviewers.

The same community guidelines that forbid “technically manipulated content” that deceives viewers and endangers others also apply to AI content on YouTube. Some videos will carry a label in the video player itself, especially those that deal with “sensitive topics,” including “elections, ongoing conflicts, public health crises, or public officials.”

According to YouTube, the policy will cover AI-generated video that “realistically depicts an event that never happened, or content showing someone saying or doing something they didn’t actually do.”